Let’s say we’re on the Data Science team at a bike rental company. The Design team strongly believes changing the color of the bikes from blue to red will boost key metrics, like number of rentals and revenue. Management agrees, and over the next week, all of the bikes are repainted. After a few days, the daily rentals are up 1% but revenue is down 5%.

What to do now? Management wants revenue back up immediately, but Design insists the look is better and Operations is happy that daily rentals are up. Marketing points out that it rained for most of the week but that it was also a holiday weekend, and asks whether that might have had an effect. In the end, the most persuasive and highest-ranking people win the debate and the bikes are all repainted in the original color, but everybody walks away bitter and confused.

This kind of scenario plays out all the time, but it doesn’t need to. Experiments are designed to give us the solid data we need to estimate the impact of a proposed change and avoid making decisions based only on personal intuition.

So why doesn’t every company test every change right from the start? Because, despite the simplicity of the core concepts, experimentation is hard to do reliably. It requires meticulous planning and implementation of dozens of design, statistical, and engineering details. It requires ongoing commitment and collaboration between many functional teams and business units. And most importantly, it requires a deep commitment to a data-driven decision-making culture, especially from senior leaders.[1]

Experiment Platforms to the Rescue!

To build a culture of reliable experimentation, you need an experiment platform. Done well, these tools reduce friction and errors at each stage of the experiment process. At the absolute minimum, your experiment platform should:

- assign subjects (e.g. users, sessions, markets) to variants correctly, so that, for example, the same subject doesn’t accidentally receive both variants
- persist subject-variant assignments for the duration of the treatment window and route subjects at run-time to the assigned variant
- allow experiment owners to start and stop trials in case of bugs or extreme results
- make subject-variant assignments available to the data warehouse for downstream analysis
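One common way to get correct, stable assignment is to hash the subject ID together with the experiment ID. The sketch below illustrates that idea only; it is not how any particular platform works, and the function name `assign_variant`, the ID formats, and the even two-way split are all assumptions made for the example.

```python
import hashlib


def assign_variant(experiment_id: str, subject_id: str,
                   variants=("control", "treatment")) -> str:
    """Deterministically map a subject to one variant of one experiment.

    Hypothetical helper: the same (experiment_id, subject_id) pair always
    hashes to the same bucket, so a subject cannot receive both variants.
    """
    key = f"{experiment_id}:{subject_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Interpret the hash as a large integer and fold it into a bucket index.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    # Illustrative IDs only.
    for user in ("user-1", "user-2", "user-3"):
        print(user, assign_variant("red-bikes", user))
```

Because the variant is a pure function of the two IDs, repeated calls always agree, which covers routing at run-time. A real platform would still record each assignment so that it reaches the data warehouse, and would need extra logic for unequal splits or mid-experiment changes.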

Good experiment platforms go beyond the minimum functionality in many ways. Among other things, they may:

- act as a repository for experiment artifacts like flight plans, analyses, and decisions
- guide experimenters to the most promising ideas to test, based on past results
- support experiment protocols other than simple A/B testing; crossover, switchback, bandit, and staged rollout protocols (among others) have advantages over A/B designs in the right situations
- templatize the planning process; the flight plan template might, for example, remind experimenters to think ahead of time about the form of the hypothesis (difference, superiority, or non-inferiority) to prevent sloppy analysis and decision-making downstream
- allow experimenters and engineers to test experiments before they launch, or inspect them while they’re running
- control when and how subject-variant assignments are propagated to relevant services
- automatically halt experiments when guardrail metrics indicate problems
- automate the analysis for standard protocols, to save time and to prevent statistical misbehavior like early peeking and p-hacking
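To make the three hypothesis forms concrete, here is a minimal sketch of how an automated analysis might treat them for a conversion-rate metric. It assumes a normal-approximation (Wald) test with an unpooled standard error, chosen for brevity rather than rigor; the function name `compare_conversion`, the `margin` parameter, and the counts in the usage lines are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def compare_conversion(conv_a, n_a, conv_b, n_b,
                       form="difference", margin=0.0):
    """Return (estimated lift, p-value) for variant B versus variant A.

    form="difference":      H0: lift == 0        (two-sided)
    form="superiority":     H0: lift <= 0        (one-sided)
    form="non_inferiority": H0: lift <= -margin  (one-sided)
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    lift = p_b - p_a
    # Unpooled standard error of the difference in proportions.
    se = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    std_normal = NormalDist()
    if form == "difference":
        p_value = 2 * (1 - std_normal.cdf(abs(lift / se)))
    elif form == "superiority":
        p_value = 1 - std_normal.cdf(lift / se)
    elif form == "non_inferiority":
        # Shift the null boundary down by the tolerated margin.
        p_value = 1 - std_normal.cdf((lift + margin) / se)
    else:
        raise ValueError(f"unknown hypothesis form: {form}")
    return lift, p_value


if __name__ == "__main__":
    # Made-up counts: 100/1000 conversions in A, 120/1000 in B.
    for form in ("difference", "superiority", "non_inferiority"):
        print(form, compare_conversion(100, 1000, 120, 1000, form, margin=0.02))
```

The same data yields a different p-value under each form, which is why it helps to fix the form in the flight plan before the data arrives rather than choosing it afterwards.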

In a virtuous cycle, the growing reliability and falling cost of experimentation prompt more teams across the company to depend on the platform for testing and rolling out new ideas. Before long, a good experiment platform reinforces the culture of rapid, evidence-driven innovation.

OK, I’m convinced. What are my options?

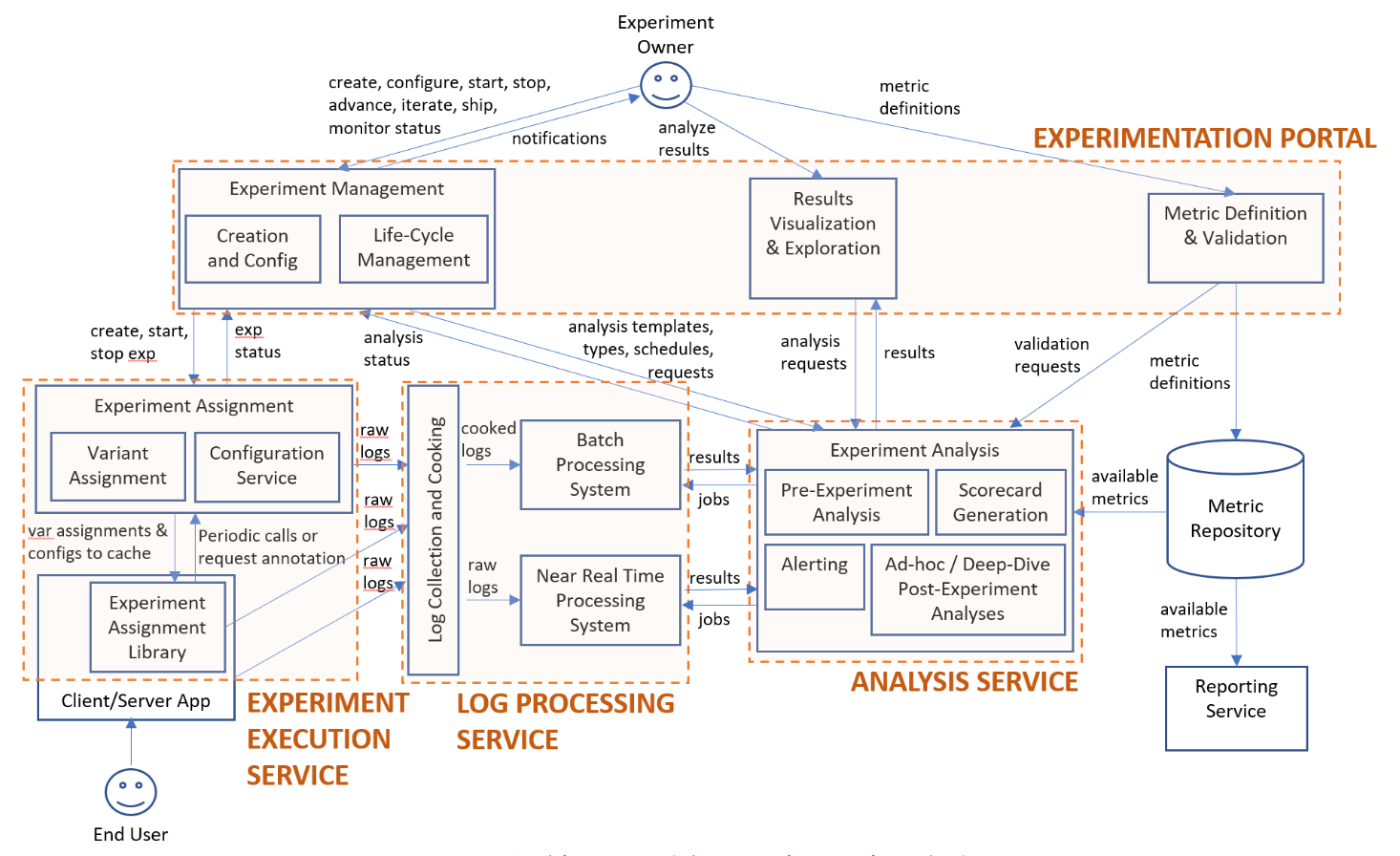

The first question is whether to build or buy. Many companies write extensively about their experiment platforms; Netflix, Uber, Microsoft, and Stitch Fix are well-known examples. Going the DIY route means you can build exactly the functionality you need and bake your opinions and culture directly into the platform. A 2018 paper by Microsoft shows the architecture of their system.

Building a system like this is very hard and the consequences of mistakes can be severe. A recent New York Times blog post illustrates how tricky it can be to implement something as simple as subject-variant assignment, and how getting it wrong means making bad decisions for years. Avoiding these kinds of mistakes requires dedicated resources from DevOps, Data Engineering, and Data Science, plus sufficient time for them to build; the cost adds up quickly.

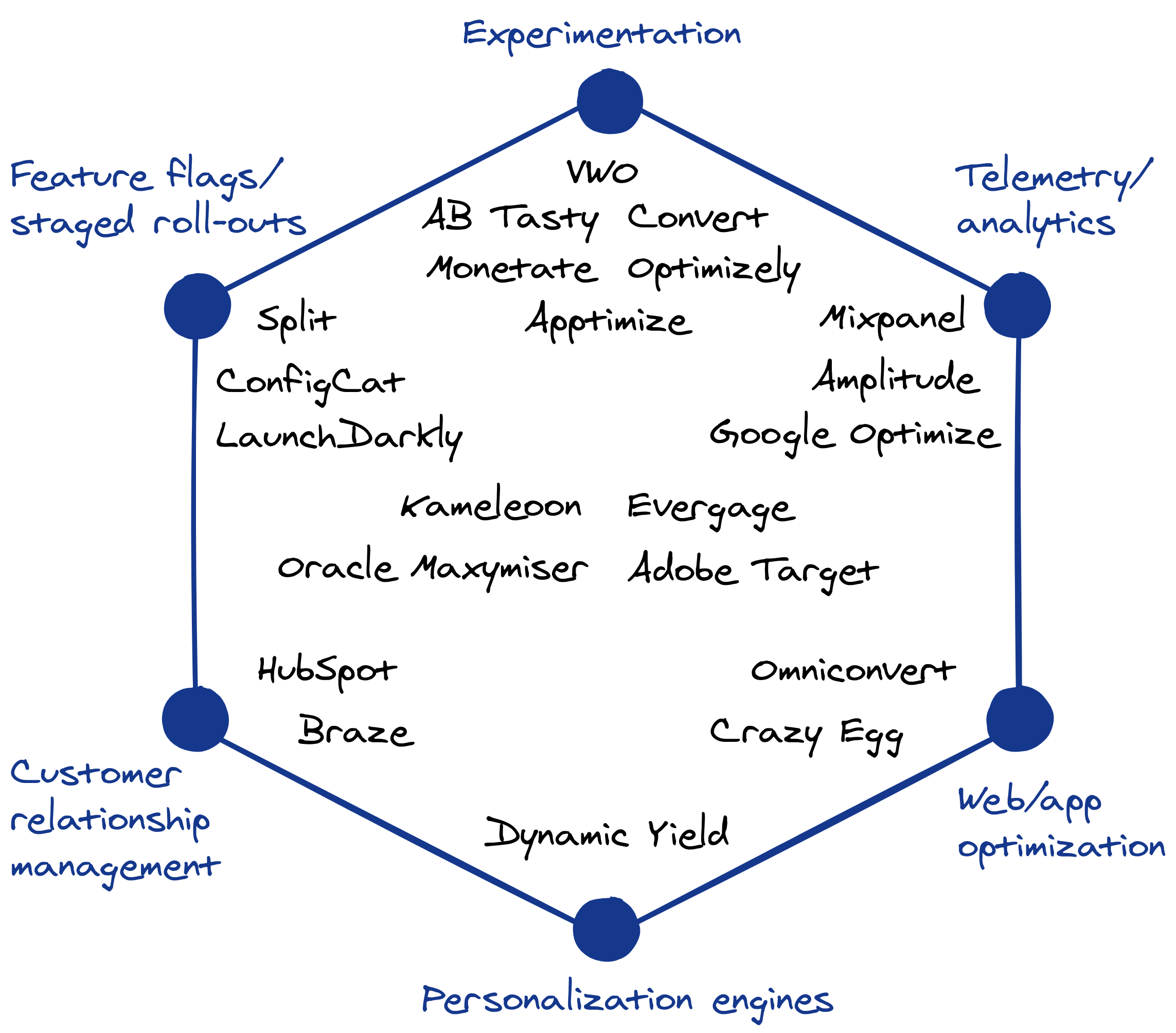

If you decide to buy, the next step is to figure out which platform is the best fit for your needs. This has gotten harder to do in recent years, as platforms from other domains have added A/B testing, while some mainstream experiment platforms have evolved their messaging to emphasize “digital experiences” and conversion rates.

Roughly speaking, experiment platforms can be categorized according to their original use case, which is somewhat of a proxy for their feature sets.

The first category is the mainstream, general-purpose experimentation platforms. VWO is a good example; its website headline reads “Fast growing companies use VWO for their A/B testing”.[2] The category boundaries are not always crystal clear, however. As we noted above, Optimizely, historically in this category, now emphasizes digital experiences after its merger with Episerver.

The second group is platforms that emphasize experimentation, but only within a particular function. This includes feature flag systems like Split.io and LaunchDarkly as well as customer relationship tools. Braze, for example, has a very nice tool for bandit-style optimization of email campaigns, but it can’t be used to control a new app feature launch.

The third group is telemetry and analytics platforms that have added A/B testing functionality. Mixpanel’s headline, for example, reads “Build Better Products. Powerful self-serve product analytics to help you convert…users”. Not much emphasis on purposeful, designed experimentation.

Finally, optimization-oriented platforms share the same goal as experiment platforms, but suggest more automated solutions with personalized content, instead of using experiments to measure average effects. Dynamic Yield falls in this category; their site reads “We help companies quickly build and test personalized, optimized, and synchronized digital customer experiences.” There’s a hint of experimentation, but the emphasis on personalization suggests a very different approach.

In the end, the devil is in the details, not the marketing messages. If you need to run switchback designs, for example, because you run a two-sided marketplace and can’t randomize users into treatment groups, which of these platforms can do that? Which can do it well? Which can do it better than building an in-house solution? These are the questions that need answers before you choose your platform.
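For readers who haven’t met switchback designs: instead of randomizing users, they randomize time windows, so everyone in a market sees the same variant during a given window. The sketch below shows one way that assignment could work, under several assumptions: fixed one-hour windows, independent randomization per market, and a hypothetical function name `switchback_variant`. It is an illustration of the idea, not a description of any vendor’s implementation.

```python
import hashlib
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def switchback_variant(experiment_id: str, market: str, ts: datetime,
                       window: timedelta = timedelta(hours=1),
                       variants=("control", "treatment")) -> str:
    """Pick the variant for a whole market during the window containing ts.

    ts must be timezone-aware. All requests in the same market and the same
    window get the same variant; the variant can switch between windows.
    """
    # Integer index of the window that ts falls in.
    window_index = (ts - EPOCH) // window
    key = f"{experiment_id}:{market}:{window_index}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    start = datetime(2024, 1, 1, tzinfo=timezone.utc)
    # Print an illustrative six-hour schedule for one made-up market.
    for hour in range(6):
        ts = start + timedelta(hours=hour)
        print(ts.isoformat(), switchback_variant("pricing-v2", "berlin", ts))
```

Whether a vendor supports this kind of unit of randomization, and whether its analysis accounts for carryover between windows, is exactly the sort of detail worth checking.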

Footnotes

[1] Stefan Thomke, 2020. Building a Culture of Experimentation. Harvard Business Review.

[2] At least one variant of VWO’s website anyway. Refreshing the page caused me to switch to different variants of the site, presumably (and reassuringly) a result of ongoing experiments.