Inspired by Andrej Karpathy’s recent show-and-tell about the various ways he uses LLMs, here’s a use case I recently discovered that I haven’t seen anybody else post yet.

I recently started learning how to play the piano. One of the things I’m struggling with is hand independence, i.e. playing with both hands at the same time but with each hand doing something different. In general, I am following Bill Hilton’s excellent video series on YouTube, but he only gives a couple of exercises per lesson and none dedicated specifically to things like hand independence.1

I wondered if an LLM chatbot could help. Here’s what I found.

My setup

My go-to LLM for day-to-day chat and code is Anthropic’s Claude 3.7 Sonnet. I’m currently on the Pro plan, which matters here only because it lets me generate longer output. For this task, I used “Normal” mode, i.e. no inference-time reasoning.

First attempt: text only

My first approach was to ask Claude for exercises I can do to improve piano hand independence, without specifying anything about the form of the output. I expected this to work well and it did. Here’s my prompt and (most of) Claude’s response:

Pretty good! I’ve tried all of these on the piano and they seem reasonable.

Second attempt: sheet music directly from the LLM

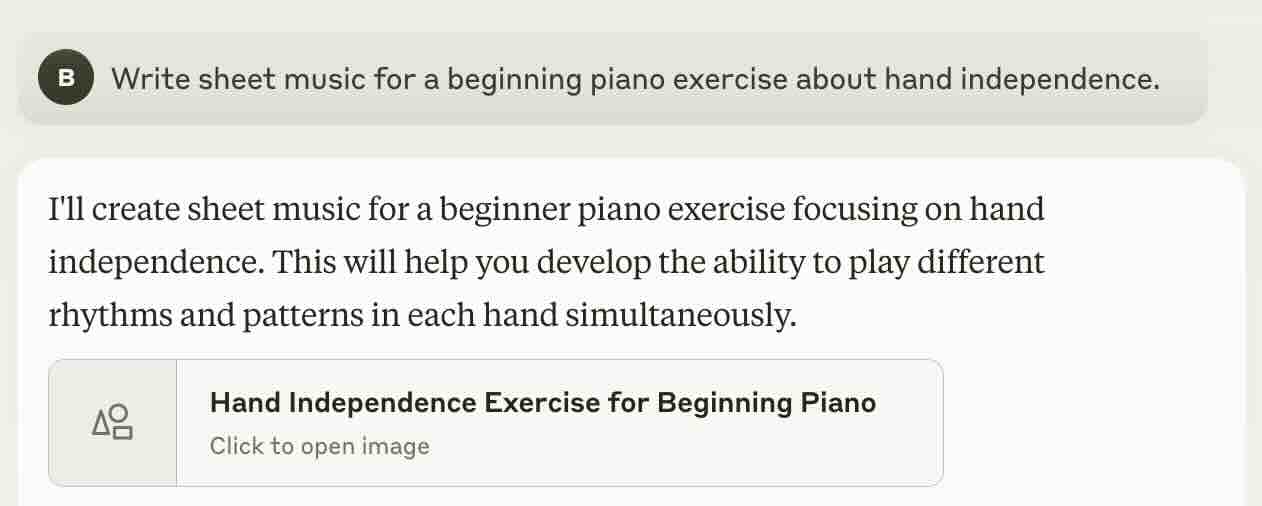

As good as the text descriptions are, it would be much nicer to have sheet music that I could print and read while sitting at the piano. Feeling lucky, I asked Claude to generate sheet music for me, without elaborating.

The results were…underwhelming. Claude drew sheet music in SVG format, which I was not expecting. On the one hand, the result is mostly legible sheet music, which is amazing. On the other hand, it looks like it was drawn by a small child.

Third attempt: Claude + MuseScore

Claude’s SVG strategy seemed silly. Piano sheet music notation follows a fairly well-defined spec and I’m asking for a very simple score, so engraving the music—drawing it on paper—should be the easy part.

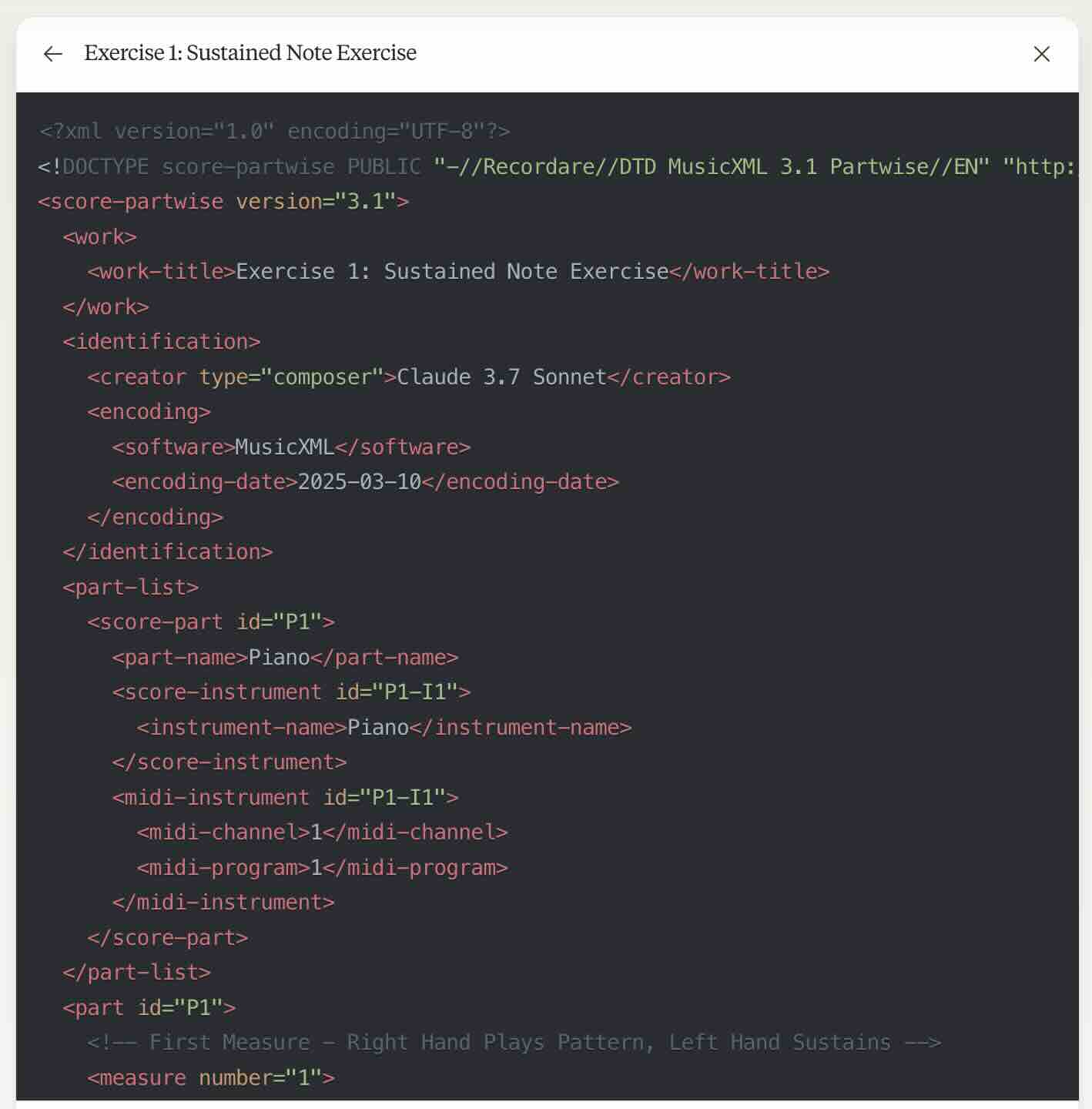

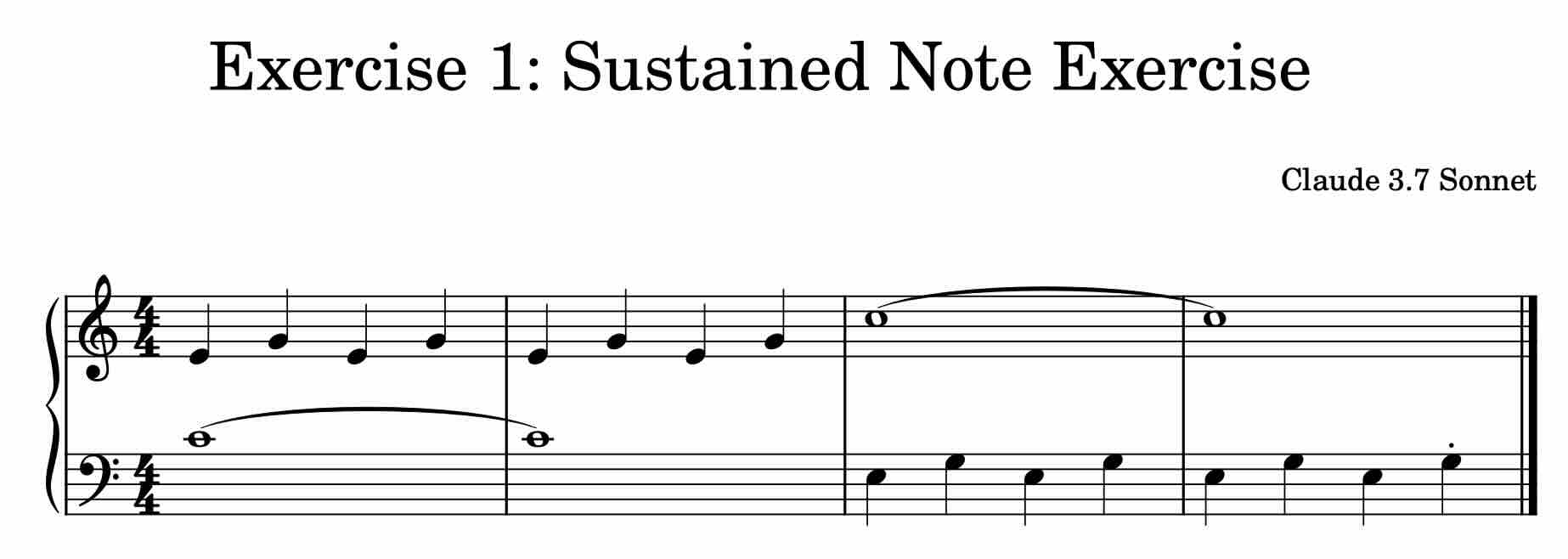

My third approach was to ask Claude to give me the exercises specifically in MusicXML format. Claude dutifully spit out well-formed MusicXML, which I uploaded to MuseScore, a free and open-source score writing tool.

Voilà—success!
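To give a sense of what the model has to write, here is a minimal sketch of a one-measure, two-staff piano exercise built as MusicXML with Python's standard library. This is not Claude's actual output; the exercise itself and the helper names (`add_note`, `build_exercise`, `to_musicxml`) are hypothetical, chosen only for illustration.

```python
import xml.etree.ElementTree as ET


def add_note(measure, step, octave, duration, note_type, staff):
    """Append one <note>, with children in the order MusicXML expects."""
    note = ET.SubElement(measure, "note")
    pitch = ET.SubElement(note, "pitch")
    ET.SubElement(pitch, "step").text = step
    ET.SubElement(pitch, "octave").text = str(octave)
    ET.SubElement(note, "duration").text = str(duration)
    ET.SubElement(note, "voice").text = str(staff)
    ET.SubElement(note, "type").text = note_type
    ET.SubElement(note, "staff").text = str(staff)


def build_exercise():
    """Right hand plays four quarter notes while the left holds two half notes."""
    score = ET.Element("score-partwise", version="3.1")
    part_list = ET.SubElement(score, "part-list")
    score_part = ET.SubElement(part_list, "score-part", id="P1")
    ET.SubElement(score_part, "part-name").text = "Piano"

    part = ET.SubElement(score, "part", id="P1")
    measure = ET.SubElement(part, "measure", number="1")

    # Measure attributes: 1 division per quarter note, C major, 4/4, two staves.
    attrs = ET.SubElement(measure, "attributes")
    ET.SubElement(attrs, "divisions").text = "1"
    key = ET.SubElement(attrs, "key")
    ET.SubElement(key, "fifths").text = "0"
    time = ET.SubElement(attrs, "time")
    ET.SubElement(time, "beats").text = "4"
    ET.SubElement(time, "beat-type").text = "4"
    ET.SubElement(attrs, "staves").text = "2"
    for number, sign, line in ((1, "G", 2), (2, "F", 4)):
        clef = ET.SubElement(attrs, "clef", number=str(number))
        ET.SubElement(clef, "sign").text = sign
        ET.SubElement(clef, "line").text = str(line)

    # Right hand (staff 1): C4 D4 E4 F4 as quarter notes.
    for step in "CDEF":
        add_note(measure, step, 4, 1, "quarter", staff=1)

    # Rewind four quarter notes so the left hand starts at beat 1.
    backup = ET.SubElement(measure, "backup")
    ET.SubElement(backup, "duration").text = "4"

    # Left hand (staff 2): C3 and G3 as half notes.
    for step in "CG":
        add_note(measure, step, 3, 2, "half", staff=2)

    return score


def to_musicxml(score):
    """Serialize the element tree to a MusicXML string."""
    body = ET.tostring(score, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(to_musicxml(build_exercise()))
```

Saving the printed text to a file with a `.musicxml` extension should give something MuseScore can open. Even this six-note measure needs dozens of elements, which hints at the problem described next.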

One important caveat: MusicXML is extremely verbose. Even on the Claude Pro plan, I could not generate more than one exercise at a time before hitting the output length limit. Side note: when that happens, Claude says to write “continue” in the prompt window, but that doesn’t help because Claude just regenerates the MusicXML artifact from the beginning and runs out of tokens at exactly the same spot.

ChatGPT and Gemini

Both ChatGPT and Google Gemini were able to do essentially the same thing as Claude but with some interesting differences.

When asked directly for sheet music, ChatGPT2 did not attempt an SVG drawing; instead, it fell back to writing the note names in text format, which was pretty useless.3 ChatGPT was also able to generate MusicXML, but the final rendered result was less thorough and less professional-looking than Claude’s output.

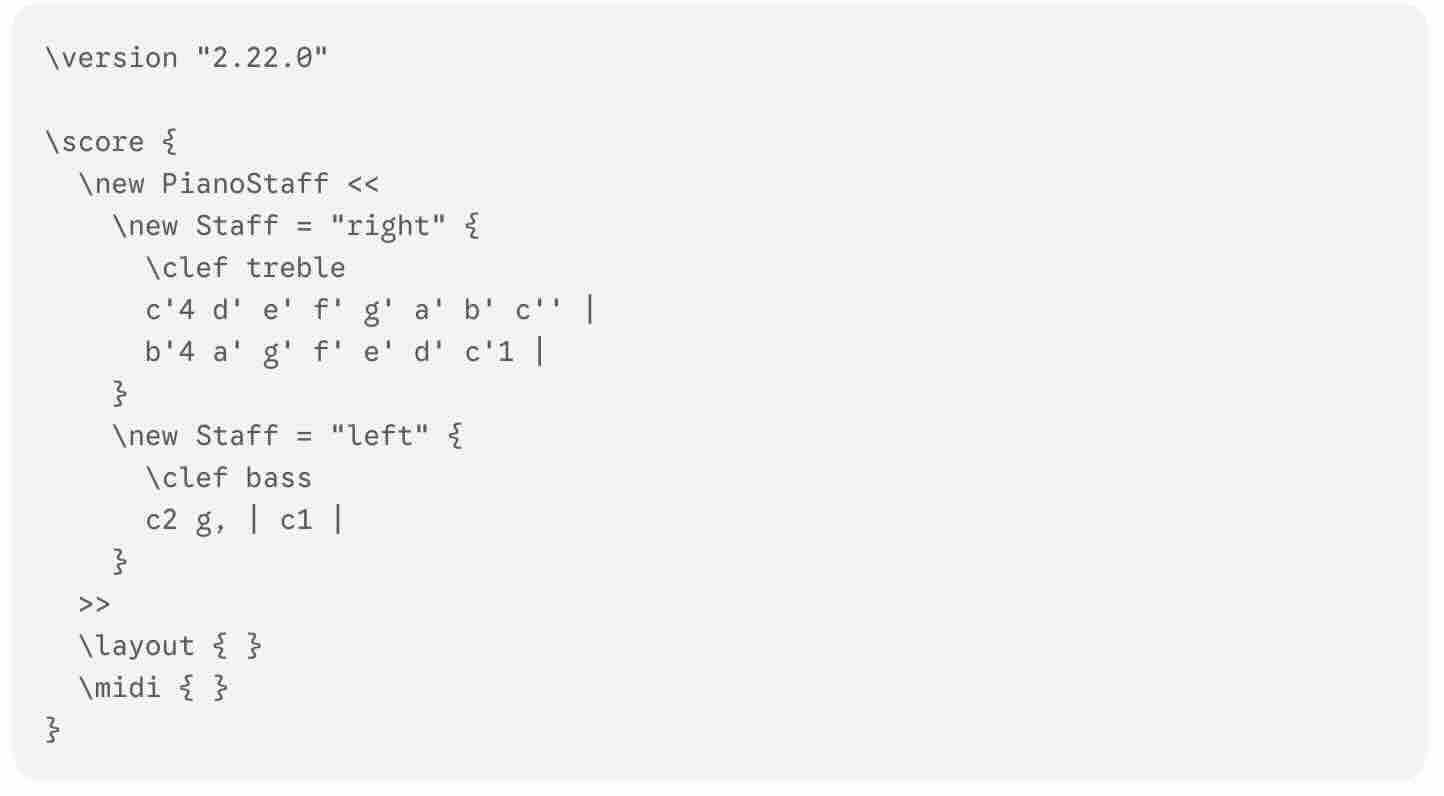

The results for Google’s Gemini 2.0 Flash went the other way. I could not get it to generate a complete MusicXML file, but it did a much better job when asked directly for sheet music. It gave me code for a different music engraving tool called LilyPond, which uses a much more concise language, plus instructions for how to download LilyPond and import that code snippet. Impressive!
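For a sense of how compact LilyPond input is, here is a minimal sketch that assembles a one-measure piano exercise as LilyPond source. It is not Gemini's actual output; the exercise and the helper names (`ly_pitch`, `ly_note`, `build_lilypond`) are hypothetical, and the `\version` line is an assumption that should match whatever LilyPond release is installed.

```python
def ly_pitch(step, octave):
    """Convert a pitch such as ("C", 4) to LilyPond absolute notation.

    In LilyPond, an unmarked letter sits in the octave below middle C;
    each apostrophe raises it an octave and each comma lowers it.
    """
    if octave >= 3:
        marks = "'" * (octave - 3)
    else:
        marks = "," * (3 - octave)
    return step.lower() + marks


def ly_note(step, octave, denominator):
    """One note with its duration, e.g. 4 = quarter note, 2 = half note."""
    return ly_pitch(step, octave) + str(denominator)


def build_lilypond(right_hand, left_hand):
    """Return LilyPond source for a two-staff piano score in 4/4."""
    rh = " ".join(ly_note(*n) for n in right_hand)
    lh = " ".join(ly_note(*n) for n in left_hand)
    lines = [
        '\\version "2.24.0"',
        "\\new PianoStaff <<",
        "  \\new Staff { \\clef treble \\time 4/4 " + rh + " }",
        "  \\new Staff { \\clef bass \\time 4/4 " + lh + " }",
        ">>",
    ]
    return "\n".join(lines) + "\n"


# Right hand: four quarter notes; left hand: two half notes.
RIGHT = [("C", 4, 4), ("D", 4, 4), ("E", 4, 4), ("F", 4, 4)]
LEFT = [("C", 3, 2), ("G", 3, 2)]

if __name__ == "__main__":
    print(build_lilypond(RIGHT, LEFT))
```

The whole score fits in five short lines of LilyPond, which can be saved to a `.ly` file and compiled with the `lilypond` command.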

Wrapping up

Yet another use case for these incredible LLMs. In general, I still like the quality of Claude’s output the best, but Gemini’s suggestion of LilyPond is a good one: MusicXML is just too verbose for anything beyond a super simple practice exercise. I think the next step is to see if I can get Claude to write LilyPond music code.
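As a rough illustration of the verbosity gap, the sketch below compares a single quarter-note middle C written in each format. Character count is used here only as a crude stand-in for output tokens, which is an assumption; real tokenizers will give different numbers, and `size_ratio` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET

# One quarter-note middle C on the upper staff, as a MusicXML <note> element.
MUSICXML_NOTE = (
    "<note><pitch><step>C</step><octave>4</octave></pitch>"
    "<duration>1</duration><type>quarter</type><staff>1</staff></note>"
)

# The same note in LilyPond: pitch c, one octave mark, quarter-note duration.
LILYPOND_NOTE = "c'4"


def size_ratio(verbose, concise):
    """How many times longer the verbose encoding is, by character count."""
    return len(verbose) / len(concise)


# Parsing confirms the MusicXML fragment is at least well-formed XML.
ET.fromstring(MUSICXML_NOTE)

if __name__ == "__main__":
    ratio = size_ratio(MUSICXML_NOTE, LILYPOND_NOTE)
    print(f"MusicXML note is about {ratio:.0f}x longer than the LilyPond note")
```

The per-note overhead is what adds up: a full page of exercises multiplies that gap by every note on the page.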